The TL;DR

- Generative AI tools boost productivity, but can leak data

- The risk can come from employees pasting code, uploading IP, sharing non-public financials, etc.

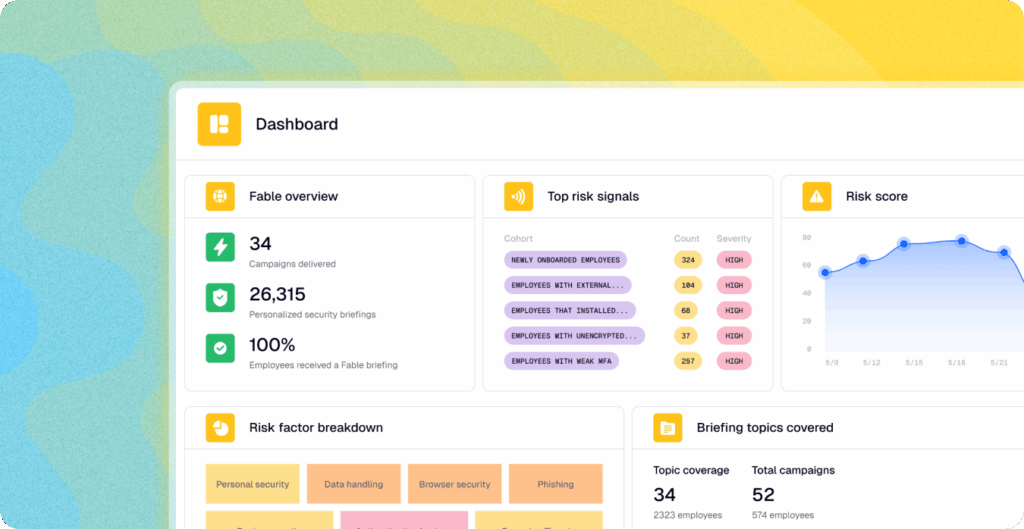

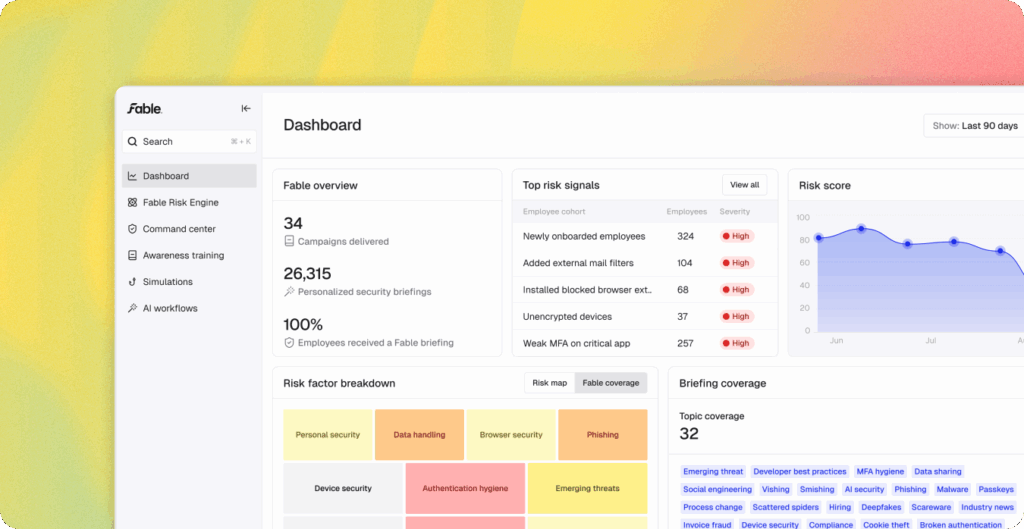

- A human behavior data lakehouse can reveal hidden patterns pointing to these risks

- You can use just-in-time interventions like super-short nudges and briefings to shape behavior

- Here’s a sample of what you might find in the Fable platform

Enterprises are adopting generative AI in a big way. People are using tools like ChatGPT, Gemini, and Claude to speed up coding, polish marketing copy, summarize contracts, and brainstorm ideas. The productivity upside is real, but so are the risks: customer data, source code, intellectual property, non-public financials, protected health information, and more can end up in places you never intended. Unlike traditional cyber threats, these exposures don’t come from an external attacker; they come from everyday employees moving too fast and maybe not realizing the consequences.

The only way to deal with this risk is to see it clearly. A human behavior data lakehouse like ours ingests signals from your workspace and security stack, normalizes them, and identifies patterns. Your security team may be able to surface some issues from individual tools, but they won’t have an easy way to see behavior across the board. More importantly, they won’t be empowered to intervene by making employees aware and suggesting alternative ways to get their jobs done, all while protecting your sensitive data.

Here are a few examples of what we see in the Fable platform:

- IAM (Okta, Azure AD) → who’s adopting and provisioning access to AI

- EDR (CrowdStrike, SentinelOne) → endpoint activity such as copy-paste

- DLP (Microsoft Purview, Netskope) → sensitive data categorizations

- SASE (Netskope, Zscaler) → sensitive data uploads to AI

To name a few.
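To make the "ingest, normalize, identify patterns" idea concrete, here is a minimal sketch of mapping events from two of the source types above into one shared shape and counting AI-related activity per person. It is an illustration under stated assumptions, not how the Fable platform or any vendor's API works: the `BehaviorEvent` class, the raw field names (`username`, `dest_app`, `user_email`, `app_name`), and the sample records are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class BehaviorEvent:
    """A common shape for events from different tools (hypothetical schema)."""
    user: str    # normalized, lowercased identity
    source: str  # which kind of tool reported it, e.g. "EDR" or "SASE"
    action: str  # what happened, e.g. "paste" or "upload"
    target: str  # the AI tool involved


# The raw field names below are invented for illustration;
# real products each expose their own schemas.
def from_edr(raw: dict) -> BehaviorEvent:
    return BehaviorEvent(raw["username"].lower(), "EDR", "paste", raw["dest_app"])


def from_sase(raw: dict) -> BehaviorEvent:
    return BehaviorEvent(raw["user_email"].lower(), "SASE", "upload", raw["app_name"])


def events_per_user(events: list[BehaviorEvent]) -> Counter:
    """Count normalized events per person across all sources."""
    return Counter(event.user for event in events)


# Made-up sample records, one list per source.
raw_edr = [{"username": "Ana@example.com", "dest_app": "ChatGPT"}]
raw_sase = [
    {"user_email": "ana@example.com", "app_name": "ChatGPT"},
    {"user_email": "bo@example.com", "app_name": "Gemini"},
]

events = [from_edr(r) for r in raw_edr] + [from_sase(r) for r in raw_sase]
counts = events_per_user(events)
```

Once identities are normalized, activity that looked like two unrelated events in two separate tools shows up as one person's pattern.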

Beyond simply telling you who’s doing what, a human behavior data lakehouse can connect more dots to give you context, such as people’s role, access, and behavior history, so you know where your risk is most acute and where to concentrate your interventions.
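As a rough illustration of how context can sharpen prioritization, the sketch below combines role, access, and behavior history into a single score and ranks people by it. Everything here is an assumption made for the example: the `UserContext` fields, the role weights, and the scoring formula are invented and are not the Fable platform's model.

```python
from dataclasses import dataclass


@dataclass
class UserContext:
    """Hypothetical per-person context joined from several sources."""
    user: str
    role: str
    has_sensitive_access: bool
    prior_incidents: int
    ai_upload_events: int


# Illustrative weights only; a real program would tune these to its own policy.
ROLE_WEIGHT = {"engineering": 2.0, "finance": 2.0}


def risk_score(ctx: UserContext) -> float:
    """Weight recent AI uploads by role and access, then add history."""
    score = ctx.ai_upload_events * ROLE_WEIGHT.get(ctx.role, 1.0)
    if ctx.has_sensitive_access:
        score *= 1.5
    return score + ctx.prior_incidents


def prioritize(contexts: list[UserContext]) -> list[UserContext]:
    """Return people ordered from highest to lowest score."""
    return sorted(contexts, key=risk_score, reverse=True)


# Made-up sample data.
people = [
    UserContext("bo", "marketing", False, 0, 4),
    UserContext("ana", "engineering", True, 1, 3),
]
ranked = prioritize(people)
```

In this toy data, the person with fewer uploads still ranks first because their role and access raise the stakes, which is the kind of signal a raw event count would miss.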

Once you’ve identified the most problematic data-sharing behaviors in your enterprise, you’ll want to take action in the moment using an automated, AI-generated intervention. That may be a quick-and-dirty nudge in Slack or Teams, or a personalized 60-ish-second video briefing referencing the person, their precise behavior, your company’s policy, whatever sanctioned applications you want to guide them to, and any specific calls to action you want to make.

Here’s an example of what such an intervention might look like: a free video you can download and use in your company. It’ll give you a taste of our short-and-sweet, highly effective, AI-generated content. As part of the Fable platform, it would be personalized, targeted, and sent just in time.

Want a briefing tailored to your organization’s tools? Schedule a short demo with us.