The TL;DR

- This report illustrates how organizations measure and reduce human risk

- Targeted, behavior-based interventions outperform broad campaigns

- Behavior change happens faster than many expect, and the most effective changes stick

- Certain risky behaviors cluster together, creating “toxic combinations”

- Download the full report for metrics, real-world examples, and insights to strengthen your human risk strategy for 2026

Every day, your employees make decisions that impact your cybersecurity posture. Some strengthen your defenses. Others—phishing clicks, sensitive data sharing, outdated passwords, slow system updates, and more—are the invisible behaviors that open the door to exposure.

Until now, when cybersecurity vendors reported on human risk metrics, those metrics were almost always some variation of phishing clicks and security awareness training engagement—hardly a measure of true risk, and certainly not a useful analysis of how to curb it.

Today, we’re excited to share something new: a report on behavior change in human risk. It’s a data-driven look at how organizations measure, understand, and reduce human risk.

Think of it as the human risk version of a Spotify Wrapped (OK, it’s less exciting than music, unless you’re a super data nerd like I am): real metrics and anecdotes from selected anonymized campaigns, plus the signals that defined the year.

This report covers data through October 31, 2025, drawn from anonymized customer environments across industries and maturity levels. It’s the opening chapter in what will become a periodic benchmark for the world of human risk.

What’s in the report

Below is a high-level overview of what we unpacked; each topic will get its own deep-dive post in the coming days. In keeping with the season, we’re calling this blog series the 12 days of riskmas (or, if you prefer, risk-mukah or the non-denominational risk-ivus).

1. The ten most common behaviors driving risk

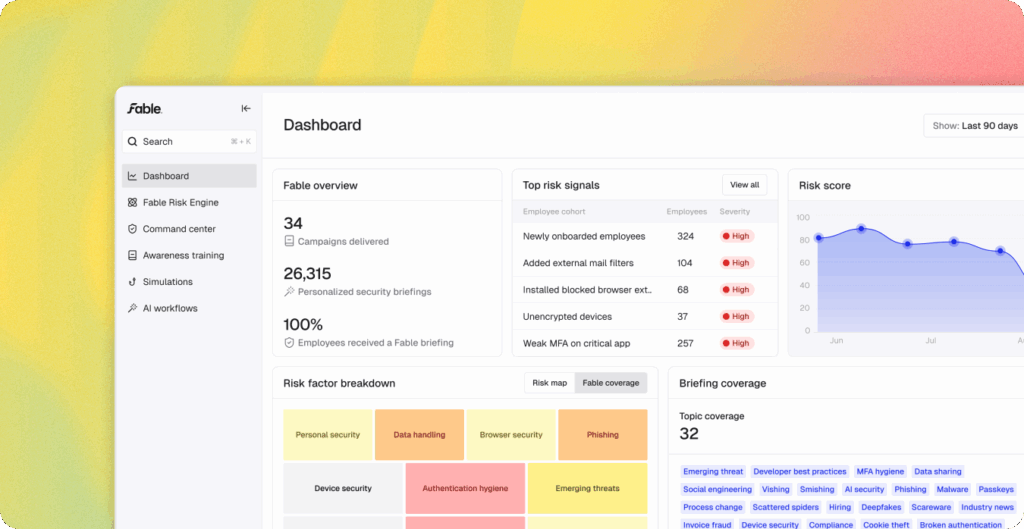

Across industries and environments, the same ten behaviors keep rising to the top, from weak or reused credentials to outdated operating systems, unsafe browser extensions, and exposure of sensitive data in generative AI tools.

2. Campaign maturity: from “send-to-all” to ultra-targeted

We organized our customers’ human risk campaigns into three broad categories: 1. general compliance (18%); 2. somewhat targeted, role- or risk-based (38%); and 3. highly targeted (44%), which aim to shape specific behaviors across identity, cloud, browser, device, and more.

3. Targeting delta: why specific beats spray-and-pray

We compared two nearly identical customer campaigns: one sent broadly and one aimed at a specific audience. The targeted campaign outperformed the broad one by a striking 33 percentage points. Customers can target on many more dimensions, but even this simple distinction shows how powerful targeting can be.
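To make the “percentage points” framing concrete, here is a minimal Python sketch that separates an absolute gap from a relative improvement. The function name `targeting_delta` and the 78% and 45% rates are hypothetical; the report states only the 33-point gap, not the underlying rates.

```python
from typing import Optional


def targeting_delta(targeted_rate: float, broad_rate: float) -> dict:
    """Compare two campaign success rates, each given as a percent (0-100).

    Returns the absolute gap in percentage points and the relative
    improvement in percent, two figures that are easy to confuse.
    """
    pp_delta = targeted_rate - broad_rate
    # Relative change is undefined when the baseline rate is zero.
    relative: Optional[float] = (
        pp_delta / broad_rate * 100 if broad_rate else None
    )
    return {"percentage_points": pp_delta, "relative_percent": relative}


# Hypothetical rates chosen only to produce a 33-point gap.
result = targeting_delta(targeted_rate=78.0, broad_rate=45.0)
print(result["percentage_points"])           # 33.0
print(round(result["relative_percent"], 1))  # 73.3
```

The same 33-point gap is a modest relative gain on a high baseline and a dramatic one on a low baseline, which is why reporting both numbers avoids misreading the result.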

4. Behavior change

True progress isn’t video completions; it’s action. We took a deep dive into one customer’s OS update campaign, powered by a one-minute video briefing and weekly nudges. Compliance climbed to 75%, then approached 99% and held there. Unlike phishing clicks and training completions, true behavior change is what reduces risk.

5. Time-to-behavior-change (TTBC)

Beyond changing behavior, doing so quickly is critical. We introduce time-to-behavior-change (TTBC), a metric inspired by the popular operations statistic mean time to remediation (MTTR). Returning to the example above, the organization reached 75% compliance in week two and 99% in week five. Depending on what’s at stake, a short TTBC can be critical to closing your exposure window before an exploit occurs.
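One way to compute TTBC for a single campaign is to find the first week in which compliance meets a target. The sketch below assumes weekly compliance snapshots; the function name `time_to_behavior_change` and every weekly value other than 75% in week two and 99% in week five are hypothetical.

```python
from typing import Optional, Sequence


def time_to_behavior_change(
    weekly_compliance: Sequence[float], target: float
) -> Optional[int]:
    """Return the first week (1-indexed) in which compliance meets target.

    weekly_compliance[i] is the percent of users compliant at the end of
    week i + 1. Returns None if the target is never reached.
    """
    for week, pct in enumerate(weekly_compliance, start=1):
        if pct >= target:
            return week
    return None


# Hypothetical series: only week 2 (75%) and week 5 (99%) mirror the
# example above; the other weeks are made up for illustration.
series = [40.0, 75.0, 88.0, 95.0, 99.0, 99.0]

print(time_to_behavior_change(series, 75.0))  # 2
print(time_to_behavior_change(series, 99.0))  # 5
```

Averaging this per-campaign value across many campaigns or behaviors would give a mean figure closer in spirit to MTTR; how the report aggregates TTBC is not specified here.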

6. Cohort comparisons: not all groups behave the same

When we break behavior down by cohort, the patterns can be eye-opening. In one customer campaign, VIPs clicked phishing links at nearly double the rate of other groups. For any behavior, our customers can build a detailed performance profile across their developers, contractors, finance teams, IT help desks, and more.
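A cohort comparison like the VIP finding boils down to a rate ratio: one cohort’s click rate divided by the combined click rate of everyone else. This is a minimal sketch; the function name `click_rate_ratio`, the event format, the cohort names, and the counts are all hypothetical and not taken from the customer campaign.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def click_rate_ratio(events: Iterable[Tuple[str, bool]], cohort: str) -> float:
    """Ratio of one cohort's phishing click rate to all other cohorts combined.

    events: one (cohort_name, clicked) pair per simulated phishing email.
    """
    sent: Dict[str, int] = defaultdict(int)
    clicks: Dict[str, int] = defaultdict(int)
    for name, clicked in events:
        sent[name] += 1
        clicks[name] += int(clicked)

    # Pool every cohort except the one being compared.
    other_sent = sum(n for name, n in sent.items() if name != cohort)
    other_clicks = sum(c for name, c in clicks.items() if name != cohort)
    if sent[cohort] == 0 or other_sent == 0 or other_clicks == 0:
        raise ValueError("need emails for both groups and at least one other click")
    cohort_rate = clicks[cohort] / sent[cohort]
    return cohort_rate / (other_clicks / other_sent)


# Hypothetical counts: VIPs 4 of 20, everyone else 10 of 100.
events = (
    [("VIP", True)] * 4 + [("VIP", False)] * 16
    + [("Finance", True)] * 5 + [("Finance", False)] * 45
    + [("Engineering", True)] * 5 + [("Engineering", False)] * 45
)
print(round(click_rate_ratio(events, "VIP"), 2))  # 2.0
```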

7. Behavior decay interval

How long does behavior change stick? We introduce the behavior decay interval, which measures the staying power of a human risk campaign—how quickly people revert to old habits.
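The report does not spell out how the behavior decay interval is calculated, so the sketch below uses an assumed definition purely for illustration: the number of weeks from peak compliance until compliance first falls below a chosen fraction of that peak. The function name `behavior_decay_interval`, the `retention` parameter (defaulting to 90%), and the data are all hypothetical.

```python
from typing import Optional, Sequence


def behavior_decay_interval(
    weekly_compliance: Sequence[float], retention: float = 0.9
) -> Optional[int]:
    """Weeks from peak compliance until it first drops below retention * peak.

    Hypothetical definition for illustration only; returns None if
    compliance never falls below the threshold within the data.
    """
    values = list(weekly_compliance)
    if not values:
        return None
    peak = max(values)
    peak_week = values.index(peak)  # first week the peak was reached
    threshold = retention * peak
    for week in range(peak_week + 1, len(values)):
        if values[week] < threshold:
            return week - peak_week
    return None


# Hypothetical series: one peaks at 97% in week 3 and drifts down,
# the other holds near its peak.
decaying = [60.0, 85.0, 97.0, 95.0, 91.0, 86.0, 80.0]
steady = [50.0, 90.0, 99.0, 99.0, 98.0]

print(behavior_decay_interval(decaying))  # 3
print(behavior_decay_interval(steady))    # None
```

A longer interval (or None, meaning no measurable decay yet) indicates a campaign whose effect is sticking; the retention threshold is a tuning choice, not a value from the report.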

8. AI swashbucklers

Which groups upload the most content to generative AI tools? In one customer environment, the technology team led by a wide margin, followed by the legal and compliance team. Uploaded content broke down into code (60%), documents (26%), media (5%), and other (9%).

9. Toxic combinations

Some risks are dangerous on their own but become toxic when combined. We define a risk lift measurement for these toxic combinations: it flags two (or more) risks that co-occur more often than you’d expect by chance. Focusing on toxic combinations can help security professionals prioritize interventions.
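Co-occurrence “higher than you’d expect by chance” is what the classic lift measure from association-rule mining captures: lift = P(A and B) / (P(A) * P(B)). The sketch below computes that standard lift over per-user risk labels. It is an assumption that the report’s risk lift follows this exact formula, and the function name `risk_lift`, the labels, and the population are hypothetical.

```python
import math
from typing import List, Set


def risk_lift(users: List[Set[str]], risk_a: str, risk_b: str) -> float:
    """Standard lift of two risks co-occurring across a user population.

    users holds one set of observed risk labels per user.
    lift = P(A and B) / (P(A) * P(B)); above 1 means the pair co-occurs
    more often than it would if the two risks were independent.
    """
    n = len(users)
    if n == 0:
        return math.nan
    p_a = sum(risk_a in u for u in users) / n
    p_b = sum(risk_b in u for u in users) / n
    p_ab = sum(risk_a in u and risk_b in u for u in users) / n
    if p_a == 0 or p_b == 0:
        return math.nan  # lift is undefined if either risk never occurs
    return p_ab / (p_a * p_b)


# Hypothetical population of 10 users.
users = (
    [{"reused_password", "outdated_os"}] * 4  # both risks
    + [{"reused_password"}]                    # first risk only
    + [{"outdated_os"}]                        # second risk only
    + [set()] * 4                              # neither
)
print(round(risk_lift(users, "reused_password", "outdated_os"), 2))  # 1.6
```

A lift near 1 means the two risks appear together about as often as independence predicts; values well above 1 flag candidate toxic combinations. One common extension to three or more risks divides the joint probability by the product of each risk’s individual probability.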

10. Measure what matters—real behavior

No more vanity metrics. Human risk becomes far more manageable when you look at the actual behaviors that make up that risk. In the deep-dive post on this topic, we’ll recommend the right behavior change campaigns to run.

11. Target with precision

One-size-fits-all training is so yesteryear. Targeting matters. Roles matter. Access matters. Risk matters. The more specific the targeting, the faster the organization reduces risk. In the deep-dive post on this topic, we’ll cover the many ways our customers target their users with training, phishing simulations, and behavioral interventions.

12. Fix the highest-leverage risks first

Not all risks are created equal. What’s at stake matters, and toxic combinations can multiply the risk. We’ll share some examples of how this happens in the real world, and offer advice for where to start.

This report is the beginning of our long-term effort to bring clarity, consistency, and measurable outcomes to human risk. Over the next 11 posts in our 12 days of riskmas series, we’ll unpack each section of the report—and share practical takeaways security teams can use right now. Check in tomorrow (day 1) for a look at the 10 most common human risks.